Here on EcoModder we see a lot of claims made about the effectiveness (or lack of effectiveness) of specific modifications. It's an understatement to say that not all claims are created equal.

Just because someone says a modification worked for them, that does not make it "proof".

When looking at the claims a person or company makes about a modification, we need to understand that the likelihood of their claim being true is directly related to whether they know how to conduct a proper test.

So we should be respectfully skeptical of fuel economy claims until we know the details of how the modification was tested. We should remain skeptical if the testing was weak or the test details aren't given.

(Note that "skepticism" doesn't equal "disbelief".)

-------------------

Lab testing is king

-------------------

Testing done on a dynamometer or in a wind tunnel is ideal, since it reduces to a minimum the number of variables that can affect the outcome.

(We should be especially skeptical of companies selling supposed fuel saving products that don't do lab testing, or don't share the testing details with consumers. After all, if they're convinced their product works, they should feel confident about paying for high-quality testing. Wouldn't it improve the product's sales potential?)

Of course, it's still entirely possible to screw up lab testing, but it's the best starting point.

------------------------------------------------

On-road testing: the poor cousin of lab testing

------------------------------------------------

Unfortunately, not too many EcoModder members have access to chassis dynamometers or wind tunnels. This leaves on-road testing as the next option, and so right off the bat the potential quality of the results goes down.

Why? Because outside of a lab, the number of variables that can distort a test goes waaaaaay up.

That said, there are different degrees of on-road testing. It can range from truly useless "junk science" to reasonably acceptable experimentation. The difference boils down to the amount of effort made to reduce the number of variables that can influence the outcome.

---------------------------------------

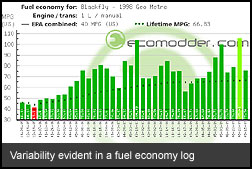

What's the big deal about variability?

---------------------------------------

In short: we need to be confident that the results we are seeing are from the modification being tested, and not from some outside factor(s).

Fuel consumption is remarkably sensitive to all kinds of variables:

- ambient temperature

- wind

- humidity

- barometric pressure

- vehicle temperature (not just engine, but the entire drivetrain including transmission, joints, bearings, tires)

- fuel grade/quality

- payload

- elevation/road grade

- road surface conditions / type

- speed

- other traffic

- driving style / driver psychology

All of these factors have an impact on fuel use through changing engine load, aerodynamic load, rolling resistance, chemical reactivity and thermodynamic efficiency.
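To put rough numbers on two of these variables, here's a minimal Python sketch using the standard aerodynamic drag equation (drag = ½ × air density × Cd × frontal area × airspeed²). The car's Cd, frontal area and speed are hypothetical values, humidity is ignored (dry air treated as an ideal gas), and headwind is simply added to road speed. Aero drag is only part of the total load, so fuel use won't change by the same percentage, but the swings are in the same ballpark as, or larger than, what a typical mod can deliver.

```python
# Rough look at how temperature and wind change aerodynamic drag.
# Assumptions (illustrative only): dry air as an ideal gas, constant Cd and
# frontal area, and airspeed = road speed + headwind speed.

R_DRY_AIR = 287.05  # specific gas constant for dry air, J/(kg*K)


def air_density(pressure_pa: float, temp_c: float) -> float:
    """Dry-air density in kg/m^3 from the ideal gas law (ignores humidity)."""
    return pressure_pa / (R_DRY_AIR * (temp_c + 273.15))


def drag_force(rho: float, cd: float, area_m2: float, airspeed_ms: float) -> float:
    """Aerodynamic drag force in newtons."""
    return 0.5 * rho * cd * area_m2 * airspeed_ms ** 2


def kmh_to_ms(v_kmh: float) -> float:
    return v_kmh / 3.6


# Hypothetical car: Cd = 0.30, frontal area = 2.2 m^2, cruising at 90 km/h.
CD, AREA = 0.30, 2.2
base = drag_force(air_density(101_325, 20.0), CD, AREA, kmh_to_ms(90))

# Same car and speed, but on a 0 C morning instead of a 20 C afternoon.
cold = drag_force(air_density(101_325, 0.0), CD, AREA, kmh_to_ms(90))

# Same car at 20 C, driving into a steady 10 km/h headwind.
headwind = drag_force(air_density(101_325, 20.0), CD, AREA, kmh_to_ms(90 + 10))

print(f"Drag change, 20 C -> 0 C:      {100 * (cold / base - 1):+.1f}%")
print(f"Drag change, 10 km/h headwind: {100 * (headwind / base - 1):+.1f}%")
```

Because drag scales with air density and with the square of airspeed, a cool morning or a light headwind can easily outweigh a small modification.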

Most individual vehicle mods have a very small potential impact on overall fuel consumption, typically in the range of a couple of percent. So you can see how trying to conduct a test while all kinds of other variables are changing would make the results meaningless.

Not only could you end up seeing an improvement that isn't actually caused by the modification, the reverse is also true: Uncontrolled variables could also prevent an actual improvement from showing up in the data.
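A minimal Python sketch of that second problem, assuming (hypothetically) that each run's result scatters randomly around its true value with a normal distribution, independently from run to run. The 2% true gain and 5% run-to-run scatter are made-up illustration numbers, not measurements. The function estimates how often a genuine improvement would show up in the data as no gain or a loss, for different numbers of runs per configuration.

```python
from statistics import NormalDist


def chance_effect_looks_negative(true_gain_pct: float,
                                 run_noise_pct: float,
                                 runs_per_config: int) -> float:
    """Probability that the measured before/after difference is <= 0
    even though the mod really improves economy by true_gain_pct.

    Assumes each run scatters independently and normally around its true
    value with standard deviation run_noise_pct (same units as the gain),
    and that "before" and "after" are each averaged over runs_per_config runs.
    """
    # Standard deviation of (mean of after runs) - (mean of before runs)
    sd_diff = run_noise_pct * (2 / runs_per_config) ** 0.5
    return NormalDist(mu=true_gain_pct, sigma=sd_diff).cdf(0.0)


for n in (1, 3, 10):
    p = chance_effect_looks_negative(true_gain_pct=2.0, run_noise_pct=5.0,
                                     runs_per_config=n)
    print(f"{n:2d} runs each: {100 * p:.0f}% chance a real 2% gain "
          f"looks like no gain or a loss")
```

Under these assumptions the odds of being misled shrink as more runs are added, but with noise this large relative to the effect they stay well above zero. That is why reducing the noise itself (the controls below) matters as much as piling on runs.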

The reason lab testing is king is because it can eliminate the highest number of these variables from a test.

--------------------------------------------------------

How to do "as controlled as possible" on-road testing

--------------------------------------------------------

So we've established that we should be automatically skeptical of on-road testing. Just how skeptical depends on how many variables the tester has managed to eliminate.

The further a test strays from the following procedure, the less confidence we should have in its results.

1) The vehicle should be fully warmed up, including drivetrain / tires.

2) Remove the driver's foot from the test, meaning cruise control should be used (set once, and cancelled with the brake between runs to ensure the same speed in multiple runs).

Any testing that can be affected by driver input (city driving is the worst) is dramatically less scientific. A driver may unconsciously change driving style to get the desired result (experimenter bias). An ideal experiment would be double blind.

Failing to "remove the driver" from the test as much as possible is a huge red flag, and we should be very skeptical of any conclusions drawn.

3) The route should be devoid of other traffic, to avoid significant aerodynamic impacts of vehicles ahead or overtaking.

4) Weather conditions should be as calm and stable as possible (wind gusts, changes in wind speed, and temperature changes will all affect results). Evening or nighttime testing can be preferable, since atmospheric conditions are typically calmer and there may be less traffic.

5) The route should be as flat & straight as possible.

6) Do bi-directional runs (test the route in both directions) to average out the effects of grade and wind, if present.

7) Use A-B-A comparisons. That means establishing a baseline (the first "A" set of runs), more test runs after making a change (the "B" set), and then additional runs after undoing the change (the last "A" set).

Why do A-B-A testing? Undoing the change and immediately re-testing the final "A" set increases confidence that any difference seen in the "B" runs was caused by the modification, and not by other uncontrolled factors.

A-B-A illustration:

(from Drive-cycle economy and emissions measurement - Fuel saving gadgets - a professional engineer's view)

Quote:

"The data set on the left shows what would be expected from a genuine fuel saving device - economy improves when it is fitted, then worsens again when it is removed. The data set on the right shows economy improving, but then staying at this higher level when the device is removed again. The logical conclusion is that the improved economy is not due to the device, but to some other factor."

8) A-B-A comparison runs should be done immediately one after the other to minimize the effects of changing weather conditions, vehicle temperature, weight, fuel quality, etc.

9) Be prepared to abandon runs with unexpected changes (e.g. a car overtaking you, affecting aerodynamic drag).

10) The more runs the better (larger data set = higher confidence)

11) Share your raw data.
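Several of the steps above (bi-directional runs, A-B-A comparisons, multiple runs) come together when crunching the numbers. Below is a minimal Python sketch, assuming each run is logged as an out-and-back pair with the fuel used on each leg (e.g. from a fuel consumption gauge). The `RunPair` format, the `aba_summary` function, the 1% drift threshold, and the example numbers are all hypothetical. The key idea is checking whether the two A baselines agree before trusting the B result, which is the distinction drawn in the quoted illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class RunPair:
    """One bi-directional pair: the same stretch driven out and back."""
    distance_km: float   # one-way length of the test stretch
    fuel_out_l: float    # fuel used on the outbound leg
    fuel_back_l: float   # fuel used on the return leg

    def l_per_100km(self) -> float:
        # Total fuel over total distance, so both directions count equally.
        return 100 * (self.fuel_out_l + self.fuel_back_l) / (2 * self.distance_km)


def aba_summary(a1: list[RunPair], b: list[RunPair], a2: list[RunPair],
                max_drift_pct: float = 1.0) -> dict:
    """Compare the B set against the average of both A baselines.

    max_drift_pct is an arbitrary, hypothetical threshold: if the two A
    sets differ by more than this, conditions probably changed during the
    test and the B result shouldn't be trusted.
    """
    a1_avg = mean(p.l_per_100km() for p in a1)
    b_avg = mean(p.l_per_100km() for p in b)
    a2_avg = mean(p.l_per_100km() for p in a2)
    baseline = (a1_avg + a2_avg) / 2
    drift_pct = 100 * (a2_avg - a1_avg) / a1_avg
    change_pct = 100 * (b_avg - baseline) / baseline  # negative = less fuel used
    return {
        "A1": a1_avg,
        "B": b_avg,
        "A2": a2_avg,
        "baseline_drift_pct": drift_pct,
        "change_vs_baseline_pct": change_pct,
        "baselines_agree": abs(drift_pct) <= max_drift_pct,
    }


# Made-up example data (10 km stretch, two pairs per set), not real results.
a1 = [RunPair(10, 0.62, 0.58), RunPair(10, 0.61, 0.59)]
b = [RunPair(10, 0.60, 0.57), RunPair(10, 0.60, 0.56)]
a2 = [RunPair(10, 0.62, 0.57), RunPair(10, 0.61, 0.60)]

report = aba_summary(a1, b, a2)
for key, value in report.items():
    print(f"{key:>24}: {value}")
```

Feeding the B runs in again as the final A set (i.e. economy "stays improved" after the mod is removed) flips `baselines_agree` to False, flagging the right-hand case from the quote. Note that averaging out-and-back pairs reduces, but doesn't perfectly cancel, wind effects, since drag rises with the square of airspeed.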

---------------------------------

Drat! Quality testing isn't easy

---------------------------------

It can actually be a big pain in the butt, and a time-eater too. (I'll often wait a week or more for calm, stable weather, and then spend 2-3 hours doing a simple A-B-A test.)

(Note, I'm not suggesting by this post that my own testing is perfect. I've done some stinker tests myself. And if I refer to the less than ideal ones, I try to point out their flaws.)

In the end, if we're not willing to do testing that's "as controlled as possible", we should have the intellectual honesty to admit that the results are questionable, and we should be wary of drawing conclusions from the data.

---------------------------------------------

Topics that may be added to this post later

---------------------------------------------

- figuring out the "quality" of data gathered in a test

- examples of bad on-road testing

- explanation of why on-road testing of fuel/oil additives is especially problematic

- other testing methods, e.g. coastdown testing for A-B-A comparisons of aero mods